Incident Report: September 27, 2021 - Delays in Workflows

Summary:

On September 27, 2021, from 07:05 to 14:46 UTC, CircleCI customers experienced delays in workflows. Due to our efforts to fix the initial incident, some customers were unable to access the UI throughout the incident. Simultaneously, AWS EBS experienced an outage in US-EAST, which degraded our MongoDB databases, causing further delays. We want to thank our customers for their patience and understanding as we worked to resolve this incident.

The original status page can be found here.

Contributing Events:

A third-party configuration event inadvertently triggered an excessive number of workflows in quick succession, which resulted in a spike of auto-cancellations being sent to our system. The large number of auto-cancellations caused slowness as requests flooded our job queues and datastores. As a result, customers experienced delays starting workflows and jobs.

The second event that contributed to this outage was an AWS incident specific to EBS volumes. This left some of our MongoDB volumes in a degraded state, which led to instability within our MongoDB clusters.

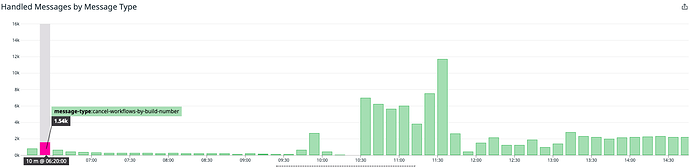

The graph below shows the increase of messages queued throughout the incident.

Future prevention:

The engineering teams who handled this incident have identified a few areas where we can make immediate improvements, in addition to planning some medium-term work. The immediate improvements are to further enforce concurrency limits on how we run pipelines and to add further rate limits on how we handle API calls and webhooks.
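To illustrate the kind of rate limiting described above, here is a minimal token-bucket sketch. It is not CircleCI's implementation: the class names, the per-source keying, and the `rate` and `capacity` values are all assumptions chosen for illustration. The idea is that each source (for example, one integration or project) may send a short burst of requests, after which further requests are rejected until tokens refill.

```python
import time


class TokenBucket:
    """Permits bursts of up to `capacity` requests, refilled at `rate` tokens/second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so a normal burst is allowed
        self.updated = clock()

    def allow(self, cost=1.0):
        # Refill based on elapsed time, never exceeding capacity.
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerSourceLimiter:
    """Hypothetical wrapper: one bucket per source, so a single noisy
    source cannot consume the budget of every other source."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets = {}

    def allow(self, source):
        bucket = self.buckets.get(source)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[source] = bucket
        return bucket.allow()
```

In a sketch like this, a webhook or API handler would call `limiter.allow(source_id)` before enqueueing work and reject or defer the request when it returns `False`. Keying by source is a design choice made here for illustration, so that a burst from one integration does not delay everyone else.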

In response to the AWS EBS incident, we have already completed some work to better handle failovers within our MongoDB cluster.

What Happened

(all times UTC)

On September 27th at 6:20 UTC, there was a large spike in “cancel workflows” messages on the workflows queue.

The graph below shows the initial spike in cancel-workflows-by-build-number messages and the resulting backlog throughout the incident.

At 6:32 we were alerted that the workflows queue was not draining fast enough. After investigating, at 7:08 we identified that all customer workflows were experiencing delays. At this time, we were still investigating why the queue was backed up.

At 7:24 we identified the spike in cancellation messages. At 7:36, we scaled up the workflows orchestration service to speed up draining the queue. Shortly after, we noticed that we were no longer receiving an elevated volume of cancellation messages.

At 7:47 we started investigating the potential reasons for the initial spike. At 7:55 we identified a third-party integration that mistakenly created a large number of workflows. Our feature for the auto-cancellation of redundant builds tried to cancel these, causing delays for all workflows messages.

At 8:13 we began updating the impacted workflows orchestration service Kubernetes pods to stop auto-cancellation. Between 8:13 and 8:44, the update was applied to all pods. Cancellation messages started trending down, but jobs were still not running. Shortly after, the MongoDB replication lag began growing.

At 8:58, all of the impacted Kubernetes pods were updated, but cancellation queries continued to spike. Upon investigation, we found that some pods had restarted and reverted to the original version, without the update. A second update was applied to return the expected data shape. By 9:29, the deployments of some service instances kept failing due to overload and reverted to the original code. We removed liveness probes to stop workflows pods from restarting. This was also expected to alleviate pressure on MongoDB.

However, by 9:45 one of our MongoDB volumes was “impaired”, and it was unclear whether this was related to an AWS US-EAST incident occurring at the same time. The workflows orchestration service was scaled down to alleviate pressure and a MongoDB replica was elected primary, but due to replication lag, data was rolled back to 8:47. By 10:19 we had scaled the workflows orchestration service back up, and shortly after we confirmed that MongoDB was healthy, with the exception of the data rollback.

At 10:25 we noticed that messages from the problematic workflows were still being handled beyond the point at which we expected them to be dropped. The team worked on a new update and confirmed by 11:08 that we were able to process all of these cancellation messages. At 11:36 jobs were being processed and the Nomad cluster scaled up to handle the influx of jobs. We continued to scale to better handle the load and updated our status page to “monitoring” at 12:11 as we processed the backlog of workflows.

While monitoring, we noticed some jobs taking nearly 20 seconds to start and found this was due to some unrelated automated checks, which were quickly disabled. By 13:22 the orchestration service’s CPU was back to normal. At 13:44 the workflows queue was drained, and at 14:07 we were back to normal operations, apart from some delay in machine jobs and status updates. At 14:46 we declared the incident resolved.